The AI Pause is Bullshit

Without consensus on action, ethical guide-rails and a policy response, it's simply delaying the inevitable

Yes, AI will change everything. But this isn’t a plan

In 15 years of writing, I have never used an expletive in any of my publications; that tells you where I sit on this issue. Sorry, Dad…

Last month the Future of Life Institute, with signatories including Elon Musk, Steve Wozniak, Yuval Noah Harari, Rachel Bronson and many more, published an open letter calling for a pause of at least six months on training AI systems more powerful than GPT-4, prompted by the rapid progress of generative AI models like OpenAI's GPT and DALL-E, Midjourney and so forth. It makes all the right statements, but without a real call to action on policy, it simply delays the inevitable reckoning we face over AI.

Now, to be fair, I did sign the letter too, because I agree with its sentiments, but it's not going to change anything.

Many people are opposed to the development of AI, full stop. They think it is irresponsible to launch algorithms that could actively manipulate public opinion and behavior. This is not an idle threat: OpenAI (the creator of ChatGPT), Stanford University, Georgetown University, Oxford University, The Public Institute and many others have demonstrated that bots and large language models have already had material effects on society and public opinion. That is not in question.

As with any advanced technology understood by so few, the potential for AI to be used for nefarious ends is glaring, and we need to fast-track action on how to integrate AI into society as it starts transforming corporations into human-free zones.

The Market Just Doesn’t Care

I firmly believe that

gets it with respect to the existential threat of AI, despite the fact that he's actively involved in creating multiple AI platforms. I think most AI practitioners probably get it. But understanding the threat and actively working to prevent it are two very different things.

The greatest problem AI has is the amorphous, mysterious and yet fundamental concept of "the market". For decades we've touted productivity as one of the core measures of modern economic progress: the ability to improve the output of our economies relative to the traditional labor force. In 2011, Marc Andreessen wrote cogently on "Why Software Is Eating the World", arguing that software-based companies were about to take over "large swathes of the economy".

Ultimately, the "market" rewards technology for disrupting markets and improving productivity. It rewarded utility, oil and steel companies during the early industrial age; it rewarded the airlines, consumer-goods and 'tronics' companies of the 1960s for transforming our everyday lives; it rewarded dot-coms during the internet boom; and it will absolutely reward AI-based companies for replacing humans with AI.

The market will never tell AI companies to take a ‘pause’ from generating differentiated returns for shareholders. No chance in hell.

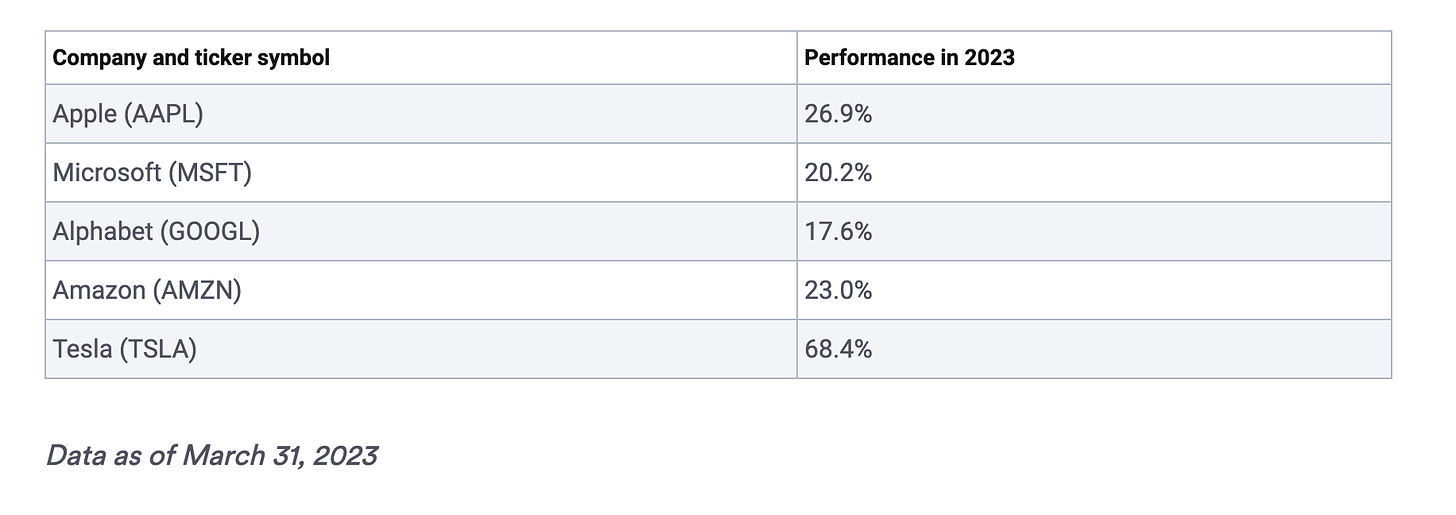

In 2023, most of the stock market's gains have come from technology players. The same was true during the pandemic, and indeed, since Andreessen wrote his 2011 article, tech players have become some of the most valuable corporations on the planet, with all of the players pictured becoming the first trillion-dollar corporations.

When it comes to AI, investors will not be denied their returns! By the end of next decade, the most valuable corporations in the world, and the most valuable economies in the world, will all be those that have deployed AI for widespread use. Bar none. If you want to compete in the 21st century, you're going to need to invest broadly in digital infrastructure, starting with core AI capabilities. So the market will not say no to AI… ever, regardless of the outcomes. That's why a pause won't rein in AI in America; companies will simply wait for the market to reopen.

That leaves Government regulation.

Right now, the fight to dominate the Smart Economy of the 2050s is an ideological battle between the Chinese and US systems. China already has the most extensive national-level AI regulation.

“The country has a number of broader schemes in place to stimulate the development of the AI industry, such as Made in China 2025, the Action Outline for Promoting the Development of Big Data (2015), the Next Generation Artificial Intelligence Development Plan (2017). In recent years, China has also fastened the pace to promulgate specific policies to regulate AI, regarding industry ethics and algorithms.”

AI in China: Regulations, Market Opportunities, Challenges for Investors (Source: China Briefing)

In fact, despite many declarations of intent, the US has yet to enact comprehensive AI legislation, or even binding, uniform guidelines on the development of AI. Why? Because traditionally the US does not get involved in reining in the free market unless doing so protects incumbent industries from competition, or a technology has demonstrated significant risks to the public. I mean, even meaningful gun control legislation is absent in the US, thanks to four decades of lobbying by the National Rifle Association (NRA).

LLMs won’t be AGI, but it doesn’t matter

The debate over whether LLMs like ChatGPT or GPT-4 could become self-aware or sentient, or become the world's first Artificial General Intelligence, is also a waste of time. The answer is that they won't become sentient, or AGIs. An AGI, by definition, is not just an AI that can converse or pass the Turing Test, but one that can replicate anything a human can do: human-equivalent intelligence. An intelligent human can do many things besides holding a conversation.

So GPT-5, or a similar LLM, might indeed be utterly convincing in conversation; you may not even know you are speaking to an AI (at least not without regulation requiring that AIs be identified in everyday interactions). You simply won't be smart enough to tell that the voice on the other end of the phone is an AI; the AI will be able to convince us it is sentient without any reasoning capabilities. We are still some time off from an AGI that can compose a sonnet, learn to drive a car, fly a plane, perform surgery, translate any language, debate human philosophy, or learn basically any trade or vocation (allowing for physical constraints).

None of this matters. AI is already outperforming humans at a range of activities, and the scope of tasks and jobs that AI can do will grow very rapidly indeed. An AGI that can learn a wide range of human abilities doesn't need to exist for AI to transform our markets, labor markets in particular. The conversation about AGI is a distraction too: AI doesn't need to reach AGI to have a material impact on human civilization.

What we actually need

I find authors like Gary Marcus frustrating. Almost-militant calls for a pause on LLM development make great soundbites if you want to sell books and TV time, but unless you are actively involved in a movement to regulate AI, you are just noise, and the market simply doesn't care.

What we actually need are guide-rails for the ethical operation of AI. We also need a working plan for what level of technology-driven unemployment we are willing to accept before we tax robots and algorithms (to fund mitigation of human labor-force displacement), provide support such as Universal Basic Income, or fund workforce development programs that look at alternative uses of human labor outside the mainstream market. Above all, we need laws of AI, like Asimov's Three Laws of Robotics. We need to decide what we will let AIs take over within our society, and that handover will need to happen progressively, in stages.

Some laws and guide-rails are pretty straightforward: an AI should not kill or harm a human. That much Asimov got right. But if you're arguing over whether a robot doctor should be allowed to perform an abortion, consensus on ethics might not even be possible in the medium term. In fact, much of the AI regulation we need will require large-scale, consensus-based policy-making, at a time when politicians around the world are building their entire platforms on refusing to reach consensus on critical issues like climate change, immigration, access to healthcare, inequality and the role of corporations in government policy.

AI is a reflection of human nature for now

Large language models, and the massive data pools that generative AI uses for training, are built on the data and behavior of humans interacting with the digital world today. As a result, we see early AI plagued by biases, learning vile, racist language, and even putting human lives at risk.

However, we also see AIs detecting cancer and disease with greater accuracy than human doctors in some studies, we see new advances in medicine that go beyond current research, and we see AI detecting patterns across research that have escaped our best thinkers. Soon we will see AIs that drive better than humans, that are better at surgery and diagnosis than humans, that are better at teaching us than human experts, and that can compete creatively with filmmakers, artists, writers and philosophers. We will see massively more efficient forms of government and smart cities, and we will learn more about the universe than our current lines of research would uncover in decades or more.

The potential benefits AI brings to humanity are too numerous to list, but the risks are insane if AI goes unregulated. For this reason, we need the following:

We need broad ethical codes of conduct for AI that go above and beyond political debate. These are the only guide-rails that matter.

We need a progressive integration plan with agreed-upon actions for when AI disrupts human labor, and we need to figure out how to make AI pay for them.

We need regulation of AI at a global level, not just at the federal level. We've already seen social media networks have a negative global impact, where localized regulation limited the damage locally, but not broadly.

We need new regulatory bodies that govern the deployment of AI at the infrastructure level in particular, but also more broadly with respect to governance, energy, finance, healthcare and public information.

All of this requires not a call for a pause on AI development (although a pause pending compliance would be effective), but faster acceptance that AI will disrupt humanity, and that the market will reward those disruptions with massive financial gains. Debating whether AI is going to create negative outcomes, kill innocents or 'take our jobs' is, frankly, a waste of time. A pause does nothing to get us to an outcome where AI can be used beneficially. Unless you are going to ban AI forever, the pause is just delaying the inevitable.

What we need is a plan. We need consensus on how AI will be allowed to operate. For now, that is enough…